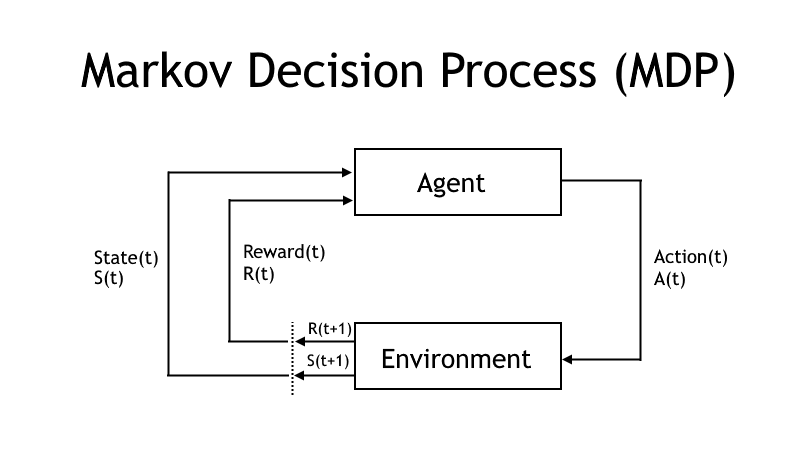

I am going to implement the SARSA (State-Action-Reward-State-Action) algorithm for reinforcement learning in this tutorial. The algorithm will be applied to the frozen lake problem from OpenAI Gym. SARSA is an algorithm used to learn an agent a markov decision process (MDP) policy.

SARSA is a passive reinforcement learning algorithm that can be applied to environments that is fully observable. SARSA uses temporal differences (TD-learning) to learn utility estimates when a transition occurs from one state to another. SARSA needs a policy/model that guides its actions, this policy/model is updated as an agent moves between states.

A SARSA agent uses a policy (on-policy algorithm) to interact with its environment, a decreasing exploration rate (epsilon) makes the agent more likely to explore in the beginning and more likely to follow the policy in the end. The agent updates its policy/model by applying a learning rate (alpha) and a discount factor (gamma). The learning rate determines how likely new knowledge is to replace old knowledge, a learning rate of 0 means no learning and a learning rate of 1 indicates that new knowledge is most imporant. The discount factor determines how important future rewards is, a discount factor of 0 means that current rewards is most important while a discount factor of 1 means that a long-time reward is most important.

Problem and Libraries

An agent should learn how to walk from an initial position to a goal position on a frozen lake, the frozen lake has holes and a slippery ground. The agent is rewarded 1 point if he reaches the goal and 0 points otherwise, the game is over if the agent reaches the goal or if he falls into a hole. The agent can move left, down, right or up. The movement direction is uncertain because of the slippery ground. The frozen lake problem is part of the gym library, i am also using the following libraries: os, math, random, pickle and numpy.

Red Marker in Command Prompt

The agent has a red background in the frozen lake problem. You need to add VirtualTerminalLevel and a value of 1 in the registry editor in HKEY_CURRENT_USER\Console if you want the red marker to show up in the command prompt on Windows 10.

Code

The code for the SARSA algorithm applied to the frozen lake problem is shown below. You can adjust parameter values to improve the performance of the agent. The policy/model is saved to disk after training and loaded from disk before training and evaluation.

# Import libraries

import os

import math

import random

import gym

import pickle

import numpy as np

# Get an action

def get_action(q_table, state, epsilon=1.0):

values = q_table[state, :]

max_value = max(values)

actions = [a for a in range(len(values))]

greedy_actions = [a for a in range(len(values)) if values[a] == max_value]

# Explore or get greedy

if (random.random() < epsilon):

return random.choice(actions)

else:

return random.choice(greedy_actions)

# Get a model

def get_model(env):

# Load a model if we have saved one

if(os.path.isfile('models\\frozen_lake.sarsa') == True):

with open ('models\\frozen_lake.sarsa', 'rb') as fp:

return pickle.load(fp)

# Create an empty model (Q-table)

return np.zeros((env.observation_space.n, env.action_space.n))

# Update the model (Q-table)

def update(model, current_state, next_state, reward, current_action, next_action, alpha=0.4, gamma=0.95):

model[current_state, current_action] = model[current_state, current_action] + alpha * ((reward + gamma * model[next_state, next_action]) - model[current_state, current_action])

# Exploration rate

def get_epsilon(t, min_epsilon, divisor=25):

return max(min_epsilon, min(1, 1.0 - math.log10((t + 1) / divisor)))

# Learning rate

def get_alpha(t, min_alpha, divisor=25):

return max(min_alpha, min(1.0, 1.0 - math.log10((t + 1) / divisor)))

# Train a model

def train():

# Variables

episodes = 1000

timesteps = 200

score = 0

total_score = 0

# Create an environment

env = gym.make('FrozenLake8x8-v0', is_slippery=True)

# Get a model (Q table)

model = get_model(env)

# Loop episodes

for episode in range(episodes):

# Start episode and get initial observation

current_state = env.reset()

# Get learning rate and exploration rate

alpha = get_alpha(episode, 0.1)

epsilon = get_epsilon(episode, 0.1)

# Get the first action (0:Left, 1:Down, 2:Right, 3:Up)

current_action = get_action(model, current_state, epsilon)

# Reset score

score = 0

# Loop timesteps

for t in range(timesteps):

# Perform a step

# Observation: position, reward: 0/1, done: True/False, info: Probability

next_state, reward, done, info = env.step(current_action)

# Get the next action

next_action = get_action(model, next_state, epsilon)

# Update the model

update(model, current_state, next_state, reward, current_action, next_action, alpha)

# Set current values as previous values

current_state, current_action = next_state, next_action

# Update score

score += reward

total_score += reward

# Check if we are done (game over)

if done:

print('Episode {0}, Score: {1}, Timesteps: {2}, Epsilon: {3}'.format(episode+1, score, t+1, epsilon))

break

# Close the environment

env.close()

# Save the model

with open('models\\frozen_lake.sarsa', 'wb') as fp:

pickle.dump(model, fp)

# Print the score

print()

print('--- Evaluation ---')

print ('Score: {0} / {1}'.format(total_score, episodes))

print()

# Print model

print('--- Model (Q-table) ---')

print(model)

print()

# Evaluate a model

def evaluate():

# Variables

episodes = 10

timesteps = 200

total_score = 0

# Create an environment

env = gym.make('FrozenLake8x8-v0', is_slippery=True)

# Get a model

model = get_model(env)

# Loop episodes

for episode in range(episodes):

# Start episode and get initial observation

state = env.reset()

# Reset score

score = 0

# Loop timesteps

for t in range(timesteps):

# Get an action (0:Left, 1:Down, 2:Right, 3:Up)

action = np.argmax(model[state])

# Perform a step

state, reward, done, info = env.step(action)

# Update score

score += reward

total_score += reward

# Check if we are done (game over)

if done:

# Render the map

print('--- Episode {0} ---'.format(episode+1))

env.render(mode='human')

print('Score: {0}, Timesteps: {1}'.format(score, t+1))

print()

break

# Close the environment

env.close()

# Print the score

print('--- Evaluation ---')

print ('Score: {0} / {1}'.format(total_score, episodes))

print()

# The main entry point for this module

def main():

# Train the model

train()

# Evaluate the model

evaluate()

# Tell python to run main method

if __name__ == "__main__": main()Training

Episode 895, Score: 0.0, Timesteps: 104, Epsilon: 0.1

Episode 896, Score: 1.0, Timesteps: 92, Epsilon: 0.1

Episode 897, Score: 0.0, Timesteps: 15, Epsilon: 0.1

Episode 898, Score: 1.0, Timesteps: 38, Epsilon: 0.1

Episode 899, Score: 1.0, Timesteps: 126, Epsilon: 0.1

Episode 900, Score: 0.0, Timesteps: 19, Epsilon: 0.1

Episode 901, Score: 0.0, Timesteps: 129, Epsilon: 0.1

Episode 902, Score: 0.0, Timesteps: 125, Epsilon: 0.1

Episode 903, Score: 1.0, Timesteps: 126, Epsilon: 0.1

Episode 904, Score: 0.0, Timesteps: 107, Epsilon: 0.1

Episode 905, Score: 0.0, Timesteps: 59, Epsilon: 0.1

Episode 906, Score: 0.0, Timesteps: 11, Epsilon: 0.1

Episode 907, Score: 1.0, Timesteps: 28, Epsilon: 0.1

Episode 908, Score: 0.0, Timesteps: 40, Epsilon: 0.1

Episode 909, Score: 0.0, Timesteps: 103, Epsilon: 0.1

Episode 910, Score: 1.0, Timesteps: 144, Epsilon: 0.1

Episode 911, Score: 1.0, Timesteps: 82, Epsilon: 0.1

Episode 912, Score: 1.0, Timesteps: 132, Epsilon: 0.1

Episode 913, Score: 1.0, Timesteps: 68, Epsilon: 0.1

Episode 914, Score: 0.0, Timesteps: 147, Epsilon: 0.1

Episode 915, Score: 1.0, Timesteps: 61, Epsilon: 0.1

Episode 916, Score: 1.0, Timesteps: 130, Epsilon: 0.1

Episode 917, Score: 1.0, Timesteps: 48, Epsilon: 0.1

Episode 918, Score: 0.0, Timesteps: 159, Epsilon: 0.1

Episode 919, Score: 1.0, Timesteps: 83, Epsilon: 0.1

Episode 920, Score: 0.0, Timesteps: 62, Epsilon: 0.1

Episode 921, Score: 0.0, Timesteps: 100, Epsilon: 0.1

Episode 922, Score: 1.0, Timesteps: 188, Epsilon: 0.1

Episode 923, Score: 0.0, Timesteps: 20, Epsilon: 0.1

Episode 924, Score: 1.0, Timesteps: 106, Epsilon: 0.1

Episode 925, Score: 0.0, Timesteps: 82, Epsilon: 0.1

Episode 926, Score: 0.0, Timesteps: 200, Epsilon: 0.1

Episode 927, Score: 0.0, Timesteps: 58, Epsilon: 0.1

Episode 928, Score: 1.0, Timesteps: 192, Epsilon: 0.1

Episode 929, Score: 1.0, Timesteps: 53, Epsilon: 0.1

Episode 930, Score: 0.0, Timesteps: 136, Epsilon: 0.1

Episode 931, Score: 0.0, Timesteps: 128, Epsilon: 0.1

Episode 932, Score: 1.0, Timesteps: 61, Epsilon: 0.1

Episode 933, Score: 0.0, Timesteps: 40, Epsilon: 0.1

Episode 934, Score: 1.0, Timesteps: 81, Epsilon: 0.1

Episode 935, Score: 0.0, Timesteps: 50, Epsilon: 0.1

Episode 936, Score: 1.0, Timesteps: 33, Epsilon: 0.1

Episode 937, Score: 0.0, Timesteps: 30, Epsilon: 0.1

Episode 938, Score: 0.0, Timesteps: 72, Epsilon: 0.1

Episode 939, Score: 1.0, Timesteps: 65, Epsilon: 0.1

Episode 940, Score: 0.0, Timesteps: 48, Epsilon: 0.1

Episode 941, Score: 1.0, Timesteps: 101, Epsilon: 0.1

Episode 942, Score: 0.0, Timesteps: 145, Epsilon: 0.1

Episode 943, Score: 0.0, Timesteps: 74, Epsilon: 0.1

Episode 944, Score: 1.0, Timesteps: 91, Epsilon: 0.1

Episode 945, Score: 1.0, Timesteps: 117, Epsilon: 0.1

Episode 946, Score: 0.0, Timesteps: 80, Epsilon: 0.1

Episode 947, Score: 0.0, Timesteps: 71, Epsilon: 0.1

Episode 948, Score: 0.0, Timesteps: 68, Epsilon: 0.1

Episode 949, Score: 1.0, Timesteps: 40, Epsilon: 0.1

Episode 950, Score: 1.0, Timesteps: 138, Epsilon: 0.1

Episode 951, Score: 1.0, Timesteps: 52, Epsilon: 0.1

Episode 952, Score: 0.0, Timesteps: 71, Epsilon: 0.1

Episode 953, Score: 0.0, Timesteps: 75, Epsilon: 0.1

Episode 954, Score: 0.0, Timesteps: 20, Epsilon: 0.1

Episode 955, Score: 0.0, Timesteps: 74, Epsilon: 0.1

Episode 956, Score: 0.0, Timesteps: 17, Epsilon: 0.1

Episode 957, Score: 0.0, Timesteps: 42, Epsilon: 0.1

Episode 958, Score: 1.0, Timesteps: 35, Epsilon: 0.1

Episode 959, Score: 1.0, Timesteps: 71, Epsilon: 0.1

Episode 960, Score: 0.0, Timesteps: 46, Epsilon: 0.1

Episode 961, Score: 0.0, Timesteps: 15, Epsilon: 0.1

Episode 962, Score: 1.0, Timesteps: 42, Epsilon: 0.1

Episode 963, Score: 0.0, Timesteps: 87, Epsilon: 0.1

Episode 964, Score: 0.0, Timesteps: 35, Epsilon: 0.1

Episode 965, Score: 0.0, Timesteps: 89, Epsilon: 0.1

Episode 966, Score: 0.0, Timesteps: 34, Epsilon: 0.1

Episode 967, Score: 0.0, Timesteps: 71, Epsilon: 0.1

Episode 968, Score: 0.0, Timesteps: 28, Epsilon: 0.1

Episode 969, Score: 0.0, Timesteps: 45, Epsilon: 0.1

Episode 970, Score: 1.0, Timesteps: 64, Epsilon: 0.1

Episode 971, Score: 0.0, Timesteps: 200, Epsilon: 0.1

Episode 972, Score: 1.0, Timesteps: 89, Epsilon: 0.1

Episode 973, Score: 0.0, Timesteps: 36, Epsilon: 0.1

Episode 974, Score: 0.0, Timesteps: 44, Epsilon: 0.1

Episode 975, Score: 0.0, Timesteps: 86, Epsilon: 0.1

Episode 976, Score: 0.0, Timesteps: 25, Epsilon: 0.1

Episode 977, Score: 0.0, Timesteps: 29, Epsilon: 0.1

Episode 978, Score: 1.0, Timesteps: 51, Epsilon: 0.1

Episode 979, Score: 0.0, Timesteps: 14, Epsilon: 0.1

Episode 980, Score: 0.0, Timesteps: 67, Epsilon: 0.1

Episode 981, Score: 1.0, Timesteps: 64, Epsilon: 0.1

Episode 982, Score: 1.0, Timesteps: 42, Epsilon: 0.1

Episode 983, Score: 1.0, Timesteps: 102, Epsilon: 0.1

Episode 984, Score: 1.0, Timesteps: 45, Epsilon: 0.1

Episode 985, Score: 1.0, Timesteps: 74, Epsilon: 0.1

Episode 986, Score: 0.0, Timesteps: 158, Epsilon: 0.1

Episode 987, Score: 0.0, Timesteps: 33, Epsilon: 0.1

Episode 988, Score: 0.0, Timesteps: 200, Epsilon: 0.1

Episode 989, Score: 1.0, Timesteps: 134, Epsilon: 0.1

Episode 990, Score: 0.0, Timesteps: 60, Epsilon: 0.1

Episode 991, Score: 0.0, Timesteps: 118, Epsilon: 0.1

Episode 992, Score: 0.0, Timesteps: 75, Epsilon: 0.1

Episode 993, Score: 0.0, Timesteps: 28, Epsilon: 0.1

Episode 994, Score: 0.0, Timesteps: 36, Epsilon: 0.1

Episode 995, Score: 1.0, Timesteps: 108, Epsilon: 0.1

Episode 996, Score: 1.0, Timesteps: 167, Epsilon: 0.1

Episode 997, Score: 0.0, Timesteps: 72, Epsilon: 0.1

Episode 998, Score: 1.0, Timesteps: 161, Epsilon: 0.1

Episode 999, Score: 0.0, Timesteps: 20, Epsilon: 0.1

Episode 1000, Score: 0.0, Timesteps: 34, Epsilon: 0.1

--- Evaluation ---

Score: 330.0 / 1000

--- Model (Q-table) ---

[[1.58822434e-02 1.83542753e-02 1.66868140e-02 1.69023887e-02]

[1.79409349e-02 1.88950361e-02 2.59922456e-02 1.85847680e-02]

[2.36208134e-02 2.19922283e-02 3.14218298e-02 2.55790725e-02]

[2.98800128e-02 3.49232197e-02 4.17804445e-02 3.42961358e-02]

[3.49698968e-02 4.23105797e-02 5.52968369e-02 4.69244825e-02]

[5.60323765e-02 5.40534652e-02 6.82347220e-02 5.66681612e-02]

[6.95953605e-02 7.37920969e-02 7.97432752e-02 7.06871250e-02]

[7.78121104e-02 8.05321716e-02 7.87840901e-02 7.33820152e-02]

[1.40025943e-02 1.35864342e-02 1.54138705e-02 1.70385997e-02]

[1.49047978e-02 1.58974876e-02 1.66414128e-02 1.95952317e-02]

[2.06635637e-02 2.11896984e-02 2.14088874e-02 2.65774700e-02]

[2.12005520e-02 2.13054825e-02 1.99610922e-02 3.50770280e-02]

[3.35060911e-02 4.45102926e-02 3.49668859e-02 3.62917935e-02]

[4.45862331e-02 5.65520299e-02 7.04236101e-02 5.38877418e-02]

[7.73476289e-02 8.46758064e-02 8.88957549e-02 7.97801518e-02]

[8.81340677e-02 9.54217586e-02 8.43980436e-02 8.12543863e-02]

[3.87344841e-03 4.03381657e-03 6.19510965e-03 1.44182728e-02]

[5.30553404e-03 4.21520872e-03 7.75274786e-03 1.41337472e-02]

[1.13591113e-02 1.74026972e-03 2.22417192e-03 4.22445654e-03]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[2.27253875e-02 1.69603540e-02 3.85779399e-02 3.35287190e-02]

[2.03989065e-02 3.74362522e-02 4.88730301e-02 6.65208777e-02]

[8.61303641e-02 8.72819163e-02 1.07443446e-01 9.82719998e-02]

[1.05038640e-01 1.19186834e-01 1.22271005e-01 1.06191074e-01]

[2.23297129e-03 2.00029401e-03 2.77216991e-03 7.94955022e-03]

[8.10664415e-03 2.27907479e-03 2.64391333e-03 2.48198272e-03]

[2.94566767e-03 2.09542586e-03 2.51435984e-03 8.21980716e-03]

[1.21590709e-03 4.11632866e-03 2.32949660e-03 9.51239071e-03]

[2.19847726e-02 4.27582672e-03 1.48586603e-02 6.99846753e-03]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[9.10808317e-02 9.31422088e-02 1.30674856e-01 8.89284563e-02]

[1.40985145e-01 1.62682619e-01 1.79528807e-01 1.30110380e-01]

[1.15762684e-03 7.26653944e-04 1.35847036e-03 3.00219897e-03]

[1.15773368e-03 1.28508461e-03 5.23269995e-04 2.95228654e-03]

[2.04631738e-03 8.44853756e-04 3.15384675e-04 4.14649578e-04]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[9.61247006e-03 2.77914396e-02 2.83902401e-02 3.89492316e-02]

[1.71003877e-02 6.36640945e-02 4.07647828e-02 3.27418306e-02]

[5.70324636e-02 8.44182572e-02 1.13571809e-01 1.31175827e-01]

[2.18134284e-01 2.26573375e-01 2.31307952e-01 2.05702978e-01]

[0.00000000e+00 6.80590744e-06 4.94023446e-04 1.60096079e-03]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[0.00000000e+00 8.46867682e-04 4.37405618e-03 8.19220688e-03]

[3.80198405e-03 6.78539574e-04 1.89507389e-02 2.01498635e-02]

[4.20096828e-02 9.36315281e-03 2.81296469e-02 1.04656804e-02]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[3.27129302e-01 2.51159618e-01 3.77047622e-01 2.37511788e-01]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 3.90852249e-05]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[2.35626869e-03 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[7.02096880e-04 2.52800811e-02 4.11033641e-02 5.73956495e-03]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[4.18710845e-01 4.88118847e-01 5.96790409e-01 3.63097922e-01]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

[5.77085845e-03 2.66688416e-02 5.77854020e-02 2.73056609e-03]

[1.17919238e-02 1.72500867e-01 9.73251261e-03 6.34293721e-02]

[0.00000000e+00 1.00000000e-01 5.97844196e-01 8.26245000e-02]

[0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]]Evaluation

--- Episode 1 ---

(Right)

SFFFFFFF

FFFFFFFF

FFFHFFFF

FFFFFHFF

FFFHFFFF

FHHFFFHF

FHFFHFHF

FFFHFFFG

Score: 1.0, Timesteps: 62

--- Episode 2 ---

(Right)

SFFFFFFF

FFFFFFFF

FFFHFFFF

FFFFFHFF

FFFHFFFF

FHHFFFHF

FHFFHFHF

FFFHFFFG

Score: 1.0, Timesteps: 109

--- Episode 3 ---

(Right)

SFFFFFFF

FFFFFFFF

FFFHFFFF

FFFFFHFF

FFFHFFFF

FHHFFFHF

FHFFHFHF

FFFHFFFG

Score: 1.0, Timesteps: 97

--- Episode 4 ---

(Up)

SFFFFFFF

FFFFFFFF

FFFHFFFF

FFFFFHFF

FFFHFFFF

FHHFFFHF

FHFFHFHF

FFFHFFFG

Score: 0.0, Timesteps: 55

--- Episode 5 ---

(Right)

SFFFFFFF

FFFFFFFF

FFFHFFFF

FFFFFHFF

FFFHFFFF

FHHFFFHF

FHFFHFHF

FFFHFFFG

Score: 1.0, Timesteps: 116

--- Episode 6 ---

(Right)

SFFFFFFF

FFFFFFFF

FFFHFFFF

FFFFFHFF

FFFHFFFF

FHHFFFHF

FHFFHFHF

FFFHFFFG

Score: 1.0, Timesteps: 106

--- Episode 7 ---

(Right)

SFFFFFFF

FFFFFFFF

FFFHFFFF

FFFFFHFF

FFFHFFFF

FHHFFFHF

FHFFHFHF

FFFHFFFG

Score: 1.0, Timesteps: 163

--- Episode 8 ---

(Right)

SFFFFFFF

FFFFFFFF

FFFHFFFF

FFFFFHFF

FFFHFFFF

FHHFFFHF

FHFFHFHF

FFFHFFFG

Score: 1.0, Timesteps: 73

--- Episode 9 ---

(Right)

SFFFFFFF

FFFFFFFF

FFFHFFFF

FFFFFHFF

FFFHFFFF

FHHFFFHF

FHFFHFHF

FFFHFFFG

Score: 1.0, Timesteps: 95

--- Episode 10 ---

(Right)

SFFFFFFF

FFFFFFFF

FFFHFFFF

FFFFFHFF

FFFHFFFF

FHHFFFHF

FHFFHFHF

FFFHFFFG

Score: 1.0, Timesteps: 142

--- Evaluation ---

Score: 9.0 / 10